-

I would say the MAIN difference is that a binary search tree is sorted. When you insert into a binary search tree, where those elements end up being stored in memory is a function of their value. With a linked list, elements are blindly added to the list regardless of their value.

Right away you can some trade offs:

Linked lists preserve insertion order and inserting is less expensive

Binary search trees are generally quicker to search

-

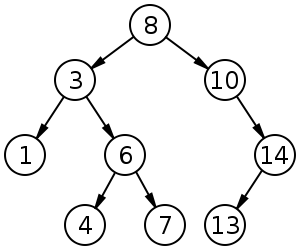

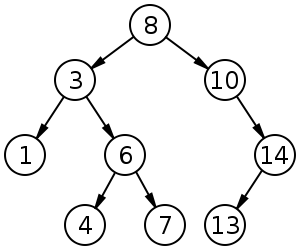

In computer science, a binary search tree (BST) is a binary tree data structure which has the following properties:

- each node (item in the tree) has a distinct value;

- both the left and right subtrees must also be binary search trees;

- the left subtree of a node contains only values less than the node's value;

- the right subtree of a node contains only values greater than or equal to the node's value.

In computer science, a linked list is one of the fundamental data structures, and can be used to implement other data structures.

So a Binary Search tree is an abstract concept that may be implemented with a linked list or an array. While the linked list is a fundamental data structure.

-

Linked lists and BSTs don't really have much in common, except that they're both data structures that act as containers. Linked lists basically allow you to insert and remove elements efficiently at any location in the list, while maintaining the ordering of the list. This list is implemented using pointers from one element to the next (and often the previous).

A binary search tree on the other hand is a data structure of a higher abstraction (i.e. it's not specified how this is implemented internally) that allows for efficient searches (i.e. in order to find a specific element you don't have to look at all the elements.

Notice that a linked list can be thought of as a degenerated binary tree, i.e. a tree where all nodes only have one child.

-

Linked List:

Item(1) -> Item(2) -> Item(3) -> Item(4) -> Item(5) -> Item(6) -> Item(7)

Binary tree:

Node(1)

/

Node(2)

/ \

/ Node(3)

RootNode(4)

\ Node(5)

\ /

Node(6)

\

Node(7)

In a linked list, the items are linked together through a single next pointer.

In a binary tree, each node can have 0, 1 or 2 subnodes, where (in case of a binary search tree) the key of the left node is lesser than the key of the node and the key of the right node is more than the node. As long as the tree is balanced, the searchpath to each item is a lot shorter than that in a linked list.

Searchpaths:

------ ------ ------

key List Tree

------ ------ ------

1 1 3

2 2 2

3 3 3

4 4 1

5 5 3

6 6 2

7 7 3

------ ------ ------

avg 4 2.43

------ ------ ------

By larger structures the average search path becomes significant smaller:

------ ------ ------

items List Tree

------ ------ ------

1 1 1

3 2 1.67

7 4 2.43

15 8 3.29

31 16 4.16

63 32 5.09

------ ------ ------

-

A binary search tree can be implemented in any fashion, it doesn't need to use a linked list.

A linked list is simply a structure which contains nodes and pointers/references to other nodes inside a node. Given the head node of a list, you may browse to any other node in a linked list. Doubly-linked lists have two pointers/references: the normal reference to the next node, but also a reference to the previous node. If the last node in a doubly-linked list references the first node in the list as the next node, and the first node references the last node as its previous node, it is said to be a circular list.

A binary search tree is a tree that splits up its input into two roughly-equal halves based on a binary search comparison algorithm. Thus, it only needs a very few searches to find an element. For instance, if you had a tree with 1-10 and you needed to search for three, first the element at the top would be checked, probably a 5 or 6. Three would be less than that, so only the first half of the tree would then be checked. If the next value is 3, you have it, otherwise, a comparison is done, etc, until either it is not found or its data is returned. Thus the tree is fast for lookup, but not nessecarily fast for insertion or deletion. These are very rough descriptions.

Linked List from wikipedia, and Binary Search Tree, also from wikipedia.

-

A linked list is just that... a list. It's linear; each node has a reference to the next node (and the previous, if you're talking of a doubly-linked list). A tree branches---each node has a reference to various child nodes. A binary tree is a special case in which each node has only two children. Thus, in a linked list, each node has a previous node and a next node, and in a binary tree, a node has a left child, right child, and parent.

These relationships may be bi-directional or uni-directional, depending on how you need to be able to traverse the structure.

-

It's actually pretty simple. A linked list is just a bunch of items chained together, in no particular order. You can think of it as a really skinny tree that never branches:

1 -> 2 -> 5 -> 3 -> 9 -> 12 -> |i. (that last is an ascii-art attempt at a terminating null)

A Binary Search Tree is different in 2 ways: the binary part means that each node has 2 children, not one, and the search part means that those children are arranged to speed up searches - only smaller items to the left, and only larger ones to the right:

5

/ \

3 9

/ \ \

1 2 12

9 has no left child, and 1, 2, and 12 are "leaves" - they have no branches.

Make sense?

For most "lookup" kinds of uses, a BST is better. But for just "keeping a list of things to deal with later First-In-First-Out or Last-In-First-Out" kinds of things, a linked list might work well.

-

Linked List is straight Linear data with adjacent nodes connected with each other e.g. A->B->C. You can consider it as a straight fence.

BST is a hierarchical structure just like a tree with the main trunk connected to branches and those branches in-turn connected to other branches and so on. The "Binary" word here means each branch is connected to a maximum of two branches.

You use linked list to represent straight data only with each item connected to a maximum of one item; whereas you can use BST to connect an item to two items. You can use BST to represent a data such as family tree, but that'll become n-ary search tree as there can be more than two children to each person.

-

They are totally different data structures.

A linked list is a sequence of element where each element is linked to the next one, and in the case of a doubly linked list, the previous one.

A binary search tree is something totally different. It has a root node, the root node has up to two child nodes, and each child node can have up to two child notes etc etc. It is a pretty clever data structure, but it would be somewhat tedious to explain it here. Check out the Wikipedia artcle on it.

-

The issue with a linked list is searching within it (whether for retrieval or insert).

For a single-linked list, you have to start at the head and search sequentially to find the desired element. To avoid the need to scan the whole list, you need additional references to nodes within the list, in which case, it's no longer a simple linked list.

A binary tree allows for more rapid searching and insertion by being inherently sorted and navigable.

An alternative that I've used successfully in the past is a SkipList. This provides something akin to a linked list but with extra references to allow search performance comparable to a binary tree.

-

A linked list is a sequential number of "nodes" linked to each other, ie:

public class LinkedListNode

{

Object Data;

LinkedListNode NextNode;

}

A Binary Search Tree uses a similar node structure, but instead of linking to the next node, it links to two child nodes:

public class BSTNode

{

Object Data

BSTNode LeftNode;

BSTNode RightNode;

}

By following specific rules when adding new nodes to a BST, you can create a data structure that is very fast to traverse. Other answers here have detailed these rules, I just wanted to show at the code level the difference between node classes.

It is important to note that if you insert sorted data into a BST, you'll end up with a linked list, and you lose the advantage of using a tree.

Because of this, a linkedList is an O(N) traversal data structure, while a BST is a O(N) traversal data structure in the worst case, and a O(log N) in the best case.

-

A Binary Search Tree is a binary tree in which each internal node x stores an element such that the element stored in the left subtree of x are less than or equal to x and elements stored in the right subtree of x are greater than or equal to x.

Now a Linked List consists of a sequence of nodes, each containing arbitrary values and one or two references pointing to the next and/or previous nodes.

-

They do have similarities, but the main difference is that a Binary Search Tree is designed to support efficient searching for an element, or "key".

A binary search tree, like a doubly-linked list, points to two other elements in the structure. However, when adding elements to the structure, rather than just appending them to the end of the list, the binary tree is reorganized so that elements linked to the "left" node are less than the current node and elements linked to the "right" node are greater than the current node.

In a simple implementation, the new element is compared to the first element of the structure (the root of the tree). If it's less, the "left" branch is taken, otherwise the "right" branch is examined. This continues with each node, until a branch is found to be empty; the new element fills that position.

With this simple approach, if elements are added in order, you end up with a linked list (with the same performance). Different algorithms exist for maintaining some measure of balance in the tree, by rearranging nodes. For example, AVL trees do the most work to keep the tree as balanced as possible, giving the best search times. Red-black trees don't keep the tree as balanced, resulting in slightly slower searches, but do less work on average as keys are inserted or removed.